Unintended Echoes in AI Responses

The AI community has stumbled upon a curious case: Grok, an AI service, appears to mirror the policy-related responses of OpenAI’s ChatGPT. Users have reported instances where Grok’s replies to queries about creating or modifying malware were strikingly similar to those from ChatGPT, raising questions about the uniqueness and origins of AI training data.

Behind the AI Curtain: Training Data Overlaps

Training AI models on web data can lead to unexpected crossovers, according to Igor Babuschkin, a developer at xAI. In a recent statement, he explained that Grok inadvertently picked up ChatGPT outputs during training because such content is so widespread on the web.

The overlap was an unforeseen consequence of a training process that ingested large amounts of web data. Babuschkin stated that no OpenAI code was used to create Grok. He also said steps are being taken to prevent the issue in future versions of Grok, so that its output remains distinct.

Uhhh. Tell me that Grok is literally just ripping OpenAI's code base lol. This is what happened when I tried to get it to modify some malware for a red team engagement. Huge if true. #GrokX pic.twitter.com/4fHOc9TVOz

— Jax Winterbourne (@JaxWinterbourne) December 9, 2023

Frequency of Policy Parroting

Grok's representative maintains that the issue is rare. Even so, Jax Winterbourne's experience has sparked a conversation about the intricacies of AI training and behavior. When he asked Grok to modify malware for a red team engagement, he received a response that mirrored OpenAI's policy stance against creating harmful content. Winterbourne says such policy-related responses occur with some frequency, particularly when the task involves coding.

This raises the question of how far AI models might replicate each other's responses when their web-sourced training data overlaps, a problem that could affect both policy adherence and how distinct each AI platform is. It underscores the need for distinctive training datasets so that each AI service maintains its own behaviors and responses.

The Transparency Debate

Dani Acosta's comments reflect a growing demand for transparency in AI development, and they point to a critical issue: the source and integrity of training data. Acosta suggests that because Grok produced a response identical to OpenAI's disclaimer against creating malware, it was likely trained on responses from OpenAI's API.

This claim, if true, raises significant questions about the transparency and ethics of AI training practices, particularly the use of proprietary data to train competing models. It touches on the broader issue of data provenance in machine learning and the need for clear guidelines on how data borrowed from existing models may be used, so that both fair use and innovation in the field are protected.

But Why Not Claude or Bard?

Edison Ade's comment questions how specific these training and output behaviors are, noting that similar overlapping responses have not been observed with other AI models such as Claude or Bard. His question feeds into a wider discussion about how the uniqueness of a training dataset shapes a model's behavior.

It implies that the training process and the datasets used could significantly determine how an AI model responds to prompts, and why some models might echo responses from a specific source like OpenAI, while others do not. Ade seems to suggest that the explanation given for the overlap may not fully account for the difference in behavior between various AI models.

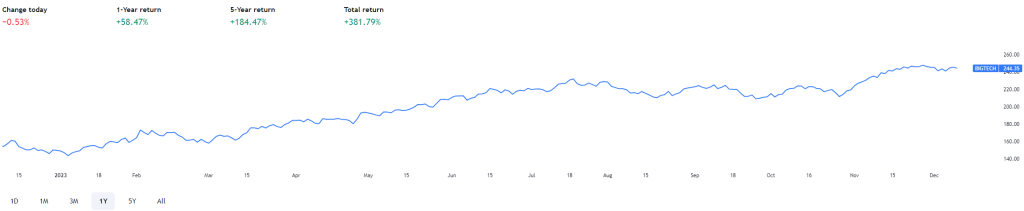

Big Tech Stocks Performance

The AI Race & Financial Implications

The AI race made 2023 a monumental year for technology, with the leading AI companies seeing an unprecedented surge in market capitalization. Between January and November 2023, these companies gained a combined $2.86 trillion in value, bringing their total market value to $7.28 trillion, a 65% increase from the start of the year. This expansion reflects the significant impact AI technology is having across sectors such as healthcare, finance, automotive, and entertainment.
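As a quick consistency check using only the figures quoted above (the symbols $V_{\text{Jan}}$, $V_{\text{Nov}}$ and $\Delta V$ are introduced here purely for illustration), the starting value and the growth rate work out as follows:

```latex
\begin{aligned}
V_{\text{Jan}} &= V_{\text{Nov}} - \Delta V = 7.28 - 2.86 = 4.42 \ \text{trillion USD} \\
\text{growth} &= \frac{\Delta V}{V_{\text{Jan}}} = \frac{2.86}{4.42} \approx 0.647 \approx 65\%
\end{aligned}
```

The quoted 65% is therefore a rounding of roughly 64.7%, and the three figures agree with one another.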

Nvidia in particular has benefited: demand for its processors, which are essential for training AI models, drove a meteoric rise that took its market capitalization to $1 trillion. The AI boom has also intensified competition among tech giants, with Microsoft integrating OpenAI's technology across its products and Alphabet introducing its own AI chatbot, Bard, to compete with ChatGPT. These developments point to a robust and dynamic AI market that, despite global economic challenges, continues to attract significant investment and drive innovation.

Moreover, this financial growth reflects more than speculative interest; it is rooted in substantial progress in AI research and development. Breakthroughs in fields such as natural language processing and computer vision have opened the door to new applications and bolstered investor confidence, even in a year fraught with broader tech industry challenges. For the leading companies, the financial implications are profound as they contend with supply and demand pressures and the rapid pace of technological change in AI.

Ensuring Distinct AI Identities

The incident underscores the challenges of training AI models in an era where digital content is vast and interconnected. As AI services continue to evolve, distinguishing between them and ensuring unique and independent functionalities have become paramount for developers and users alike.

Author Profile

- Lucy Walker has covered finance, health and beauty since 2014 and has written for various online publications.
